Machine Learning & Computer Vision: Multimodal Human Behavior Understanding in Videos.

Thesis on Self-Supervised 3D Gaze Estimation.

Courses: Computer Vision, NLP, Bayesian Statistics, Time Series Analysis.

ML for human perception in the automotive space. Simi is part of ZF LIFETEC, ZF Group.

Multimodal human-centric video understanding (visual attention modeling, hand-object interaction, social interaction) and vision-language models (VLMs).

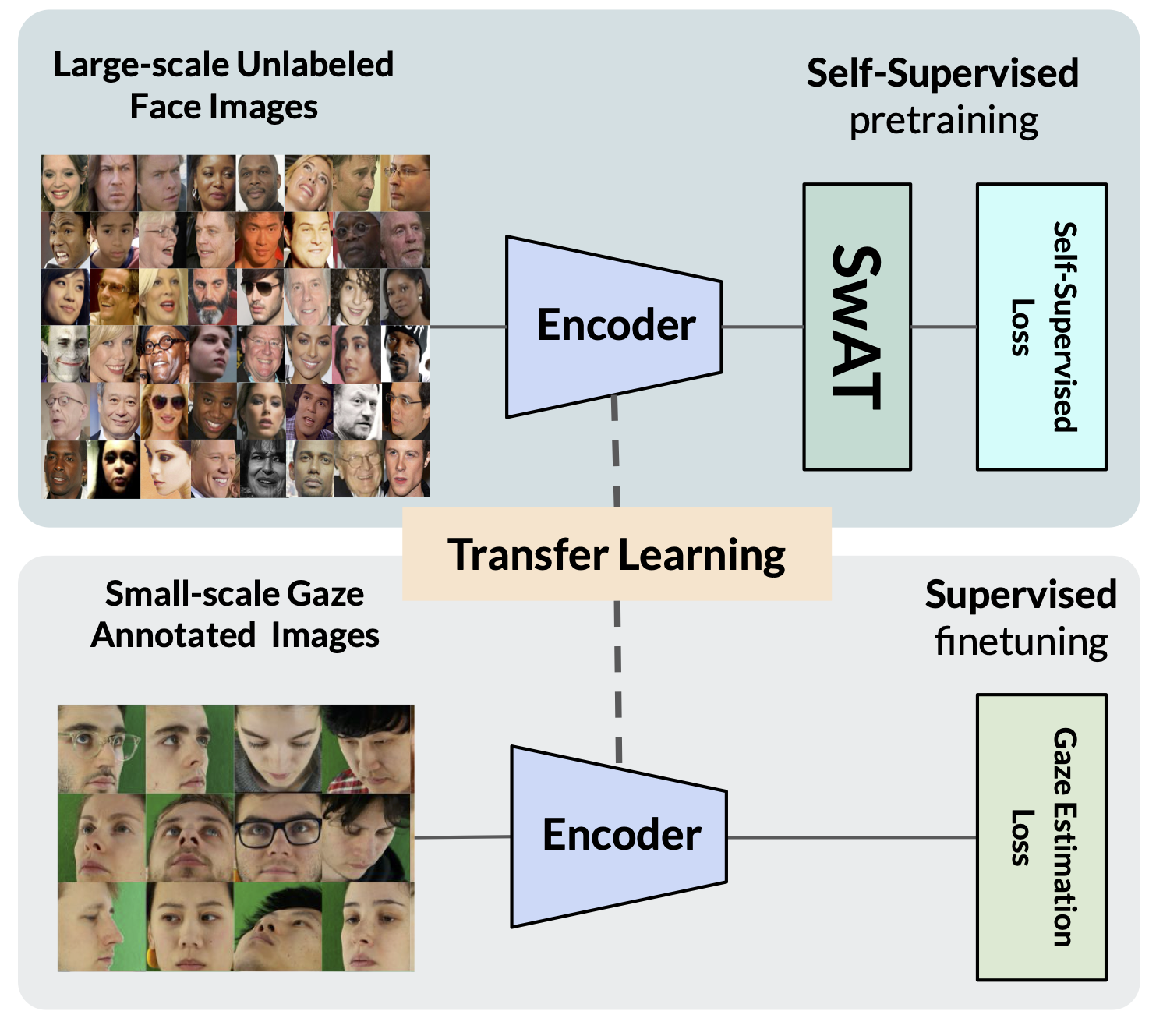

Geometrically equivariant self-supervised representation learning for 3D gaze estimation.

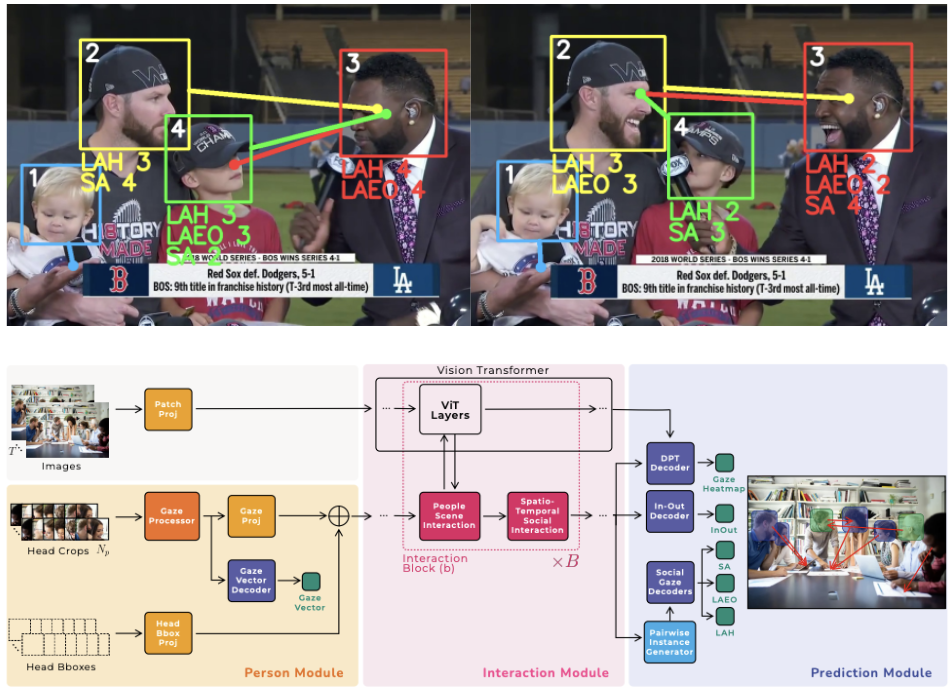

NeurIPS 2024

Anshul Gupta, Samy Tafasca, Arya Farkhondeh, Pierre Vuillecard, Jean-Marc Odobez.

arXiv

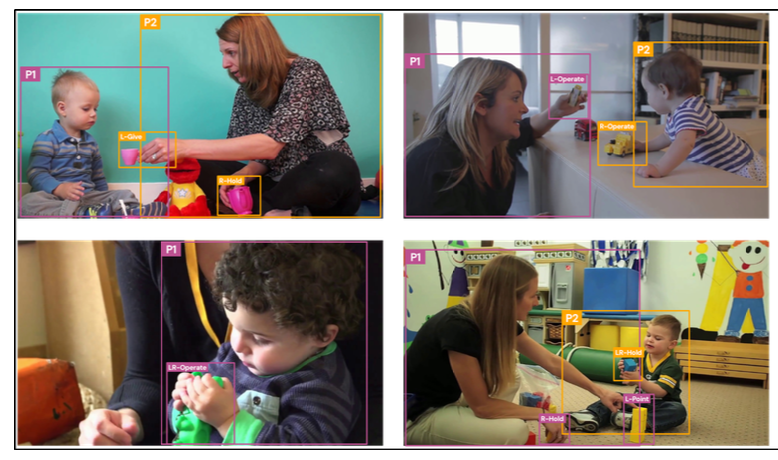

ECCV Workshops 2024

Arya Farkhondeh*, Samy Tafasca*, Jean-Marc Odobez.

arXiv data code

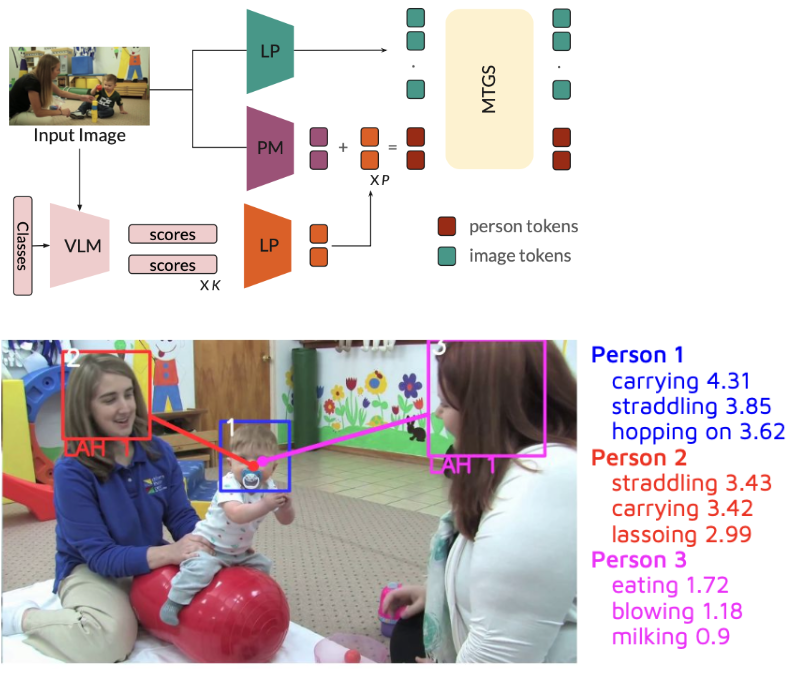

CVPR Workshops 2024 (NVIDIA Best Paper Award 🥇)

Anshul Gupta*, Pierre Vuillecard*, Arya Farkhondeh, Jean-Marc Odobez.

arXiv video

BMVC 2022

Arya Farkhondeh, Cristina Palmero, Simone Scardapane, Sergio Escalera.

arXiv code website